AI Chips: Nvidia, Amazon, Google, Microsoft, and Meta Dominate

AI chips from Nvidia, Amazon, Google, Microsoft, and Meta are reshaping the tech landscape. These companies are at the forefront of AI hardware development, driving innovation in data centers, machine learning, and beyond. This deep dive explores the current state of the market, competitive strategies, technological advancements, and the future implications of these chips.

The market is fiercely competitive, with each company vying for dominance through unique features and applications. This blog post provides a comprehensive overview of the current state of play, highlighting the strengths, weaknesses, and future prospects of each player.

Overview of AI Chips Market

The global AI chip market is experiencing explosive growth, fueled by the increasing demand for powerful computing capabilities to drive artificial intelligence applications. Leading tech giants are aggressively investing in this sector, recognizing the strategic importance of AI chips for future innovation and dominance. This market is no longer a niche area but a critical component in the development of many modern technologies.

Current State of the AI Chip Market

The AI chip market is currently dominated by a handful of major players, each vying for a larger share of the rapidly expanding market. Competition is intense, with companies constantly innovating to enhance performance, reduce costs, and cater to diverse applications. This dynamic environment is characterized by both rapid technological advancements and substantial investment.

Major Players and Market Shares

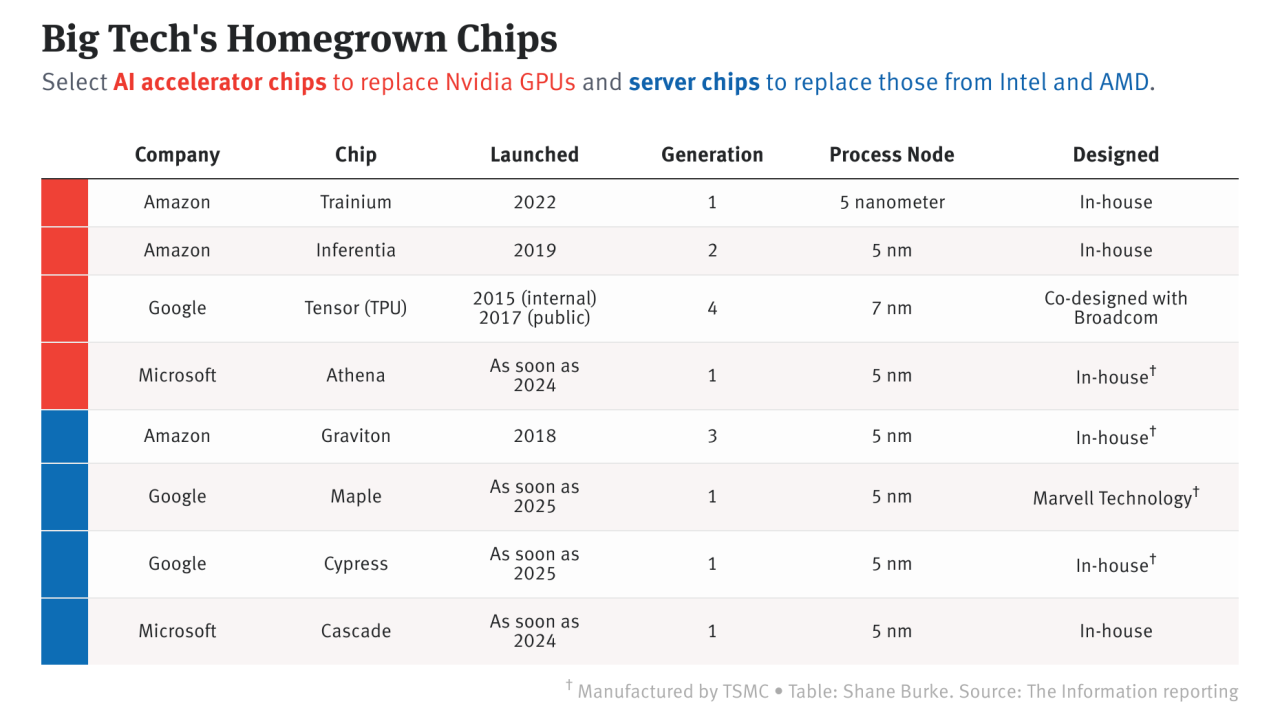

Nvidia, Amazon, Google, Microsoft, and Meta are the key players shaping the AI chip landscape. Precise market share data is often proprietary, but Nvidia is widely regarded as holding the largest share, thanks to the strength of its GPU-based solutions for AI workloads. Amazon Web Services (with its Trainium and Inferentia chips), Google (with its Tensor Processing Units, or TPUs), and Microsoft (with custom silicon for Azure) are also significant players, catering to cloud-based AI needs.

Meta’s involvement focuses on specialized AI chips for its own data centers. Reported market share percentages vary depending on the source and the methodology used to calculate them.

Key Technological Advancements

Several key technological advancements are driving the growth of the AI chip market. These include:

- Specialized Architectures: Companies are developing specialized architectures, like TPUs, designed to optimize performance for specific AI tasks, such as image recognition or natural language processing. This specialized approach often yields superior performance compared to general-purpose processors.

- Enhanced Chip Design: Continuous improvements in chip design, including advancements in transistor scaling, memory integration, and interconnection technologies, result in faster and more energy-efficient AI chips.

- Customizable Platforms: Customizable AI platforms enable users to tailor chip configurations to their specific needs, allowing for optimal performance and efficiency for varied applications.

- Hardware Acceleration: Hardware acceleration for key AI algorithms and tasks is a significant advancement, directly contributing to the speed and efficiency of AI processing.

Predicted Growth Trajectory

The AI chip market is expected to continue its upward trajectory, driven by factors like the proliferation of AI applications across diverse sectors, from healthcare to finance. The demand for faster, more efficient AI processing will likely accelerate this growth. Consider, for instance, the increasing reliance on AI for autonomous vehicles, which necessitates substantial computational power for real-time decision-making.

Key Features and Specifications of AI Chips

| Company | Chip Type | Key Features | Example Specifications |
|---|---|---|---|
| Nvidia | GPUs (e.g., H100) | Highly parallel processing, versatile architecture | High floating-point throughput, large memory capacity |
| Amazon | Custom chips (e.g., Trainium) | Optimized for cloud-based AI workloads, high efficiency | Scalable architecture, low power consumption |
| Google | TPUs (e.g., TPU v4) | Specialized for machine learning tasks, high performance | High tensor throughput, specialized hardware |
| Microsoft | Custom chips (e.g., Azure Maia) | Optimized for cloud infrastructure, broad compatibility | High scalability, integrated with Azure services |
| Meta | Custom chips (e.g., for data centers) | Optimized for the specific needs of large-scale AI models | High-bandwidth memory, low latency |

Competitive Landscape Analysis

The AI chip market is fiercely contested, with industry giants like Nvidia, Amazon, Google, Microsoft, and Meta vying for dominance. Each company has distinct strengths and weaknesses, and their competitive strategies vary significantly. Understanding these nuances is crucial to grasping the future trajectory of this rapidly evolving sector. The intense competition drives innovation, pushing companies to develop increasingly powerful and specialized AI chips.

This constant pressure results in a dynamic landscape where the leading players must constantly adapt and refine their offerings to maintain their competitive edge. This analysis will delve into the specifics of each company’s strategies, highlighting key differentiators and potential future collaborations.

Strengths and Weaknesses of AI Chip Offerings

Each company’s AI chip portfolio has a distinct mix of strengths and weaknesses. Nvidia has a strong reputation for high-performance GPUs, particularly for deep learning and graphics processing; its main drawbacks are premium pricing and high power draw. Amazon’s AWS cloud platform, with its AI-focused services, offers a powerful ecosystem, but its Trainium and Inferentia chips are available only through AWS rather than as standalone products.

Google’s TensorFlow ecosystem and broad range of AI tools provide a strong advantage, though its TPUs are available mainly through Google Cloud and may not match Nvidia’s raw performance on every workload. Microsoft’s Azure cloud platform, with its growing AI capabilities, complements its broader software ecosystem, but its custom chip effort is more recent than Google’s or Amazon’s. Meta’s specialized AI chips give it a clear edge in its own data centers and social media applications, but its presence in the broader market is limited.

Competitive Strategies Employed

Companies employ various strategies to gain market share. Nvidia, with its strong focus on high-performance GPUs, leverages partnerships and collaborations to expand its reach. Amazon emphasizes its integrated cloud platform, offering AI services and chips optimized for cloud deployments. Google’s strategy revolves around open-source software and deep integration with its own cloud and AI tools. Microsoft prioritizes its Azure cloud platform and its broader software ecosystem, aiming for a comprehensive AI solution.

Meta’s approach centers around specialized chips designed for specific tasks within its own data centers and applications.

Potential Collaborations and Partnerships

The AI chip market is not solely about direct competition; collaborations and partnerships between companies are likely. These could involve joint research initiatives or co-development of specific AI chip architectures, leading to innovations that no single company could achieve alone. Nvidia’s existing relationships, such as supplying GPUs to the major cloud providers, already point in this direction.

Cross-platform compatibility and standardization could also benefit from collaborations, allowing developers to use AI chips across various platforms without significant re-engineering.

Key Differentiating Factors

Different AI chips offer varying advantages. Nvidia excels in raw performance and broad application areas, while Google and Microsoft emphasize their cloud integrations and ecosystems. Amazon prioritizes cloud-based AI services and optimization for its cloud environment. Meta prioritizes its specific needs and internal applications, while its broader market impact remains to be seen. These distinctions highlight the diverse approach each company takes to address the multifaceted demands of the AI chip market.

Price Points and Target Markets

| Company | Price Point (Estimated) | Target Market |
|---|---|---|
| Nvidia | High-end, varies by product | Data centers, high-performance computing, professional graphics |
| Amazon | Mid-range, optimized for cloud deployments | AWS customers running AI workloads in the cloud |
| Google | Mid-range, integrated with cloud services | Google Cloud customers running AI workloads |
| Microsoft | Mid-range, integrated with Azure cloud services | Azure customers running AI workloads |
| Meta | Not sold externally; built for internal use | Meta’s own data centers and social media applications |

Note: Price points are estimations and can vary based on specific chip configurations and features. Target markets are general categories and can overlap.

Applications and Use Cases

AI chips are rapidly transforming industries, from healthcare to finance, and their impact is only set to grow. These specialized processors, designed specifically to accelerate artificial intelligence tasks, are powering a new wave of innovation and efficiency. Their ability to handle complex computations at scale is driving breakthroughs in image recognition, natural language processing, and machine learning, creating new possibilities for businesses and consumers alike. The applications of AI chips now extend far beyond the research lab.

They are now being integrated into everyday devices, software, and systems, demonstrating the practical value and potential of this technology. This integration is enabling businesses to automate processes, improve decision-making, and enhance customer experiences, creating a more efficient and intelligent future.

Image Recognition Applications

AI chips are central to advancements in image recognition. Their ability to process vast amounts of visual data rapidly is essential for tasks like object detection, facial recognition, and medical image analysis. In healthcare, for example, AI chips are used to identify cancerous cells in medical scans with increased accuracy and speed. This translates to earlier diagnoses, more effective treatment plans, and improved patient outcomes.

Autonomous vehicles also rely heavily on image recognition capabilities powered by these chips, enabling them to perceive and react to their environment.

Natural Language Processing Applications

Natural language processing (NLP) tasks, such as language translation, sentiment analysis, and chatbots, are becoming increasingly important across sectors. AI chips make these capabilities practical at scale, enabling seamless interactions between humans and machines. In customer service, AI chips power chatbots that provide instant support and answer customer queries. In financial institutions, NLP is used for fraud detection and risk assessment.

Machine Learning Applications

Machine learning algorithms are crucial for tasks like predictive modeling, pattern recognition, and data analysis. AI chips significantly accelerate these algorithms, enabling faster and more accurate results. In finance, for example, AI chips can process vast amounts of financial data to identify market trends and predict future outcomes. This ability to process and analyze data at scale is transforming many industries, leading to more informed decisions and better outcomes.

Industry-Specific Applications

- Healthcare: AI chips are used for medical image analysis, drug discovery, and personalized medicine. Their ability to process massive datasets allows for quicker and more accurate diagnoses. This translates into improved patient outcomes and potentially lower healthcare costs.

- Finance: AI chips are used for fraud detection, risk assessment, algorithmic trading, and customer service chatbots. Their high processing power allows financial institutions to analyze vast amounts of data to identify patterns and mitigate risks.

- Transportation: AI chips power self-driving cars and optimize traffic flow. The ability to process real-time data enables vehicles to navigate complex environments and respond to changing conditions. This leads to safer and more efficient transportation systems.

Company-Specific Use Cases (Illustrative Table)

| Company | AI Chip | Industry Applications | Benefits | Unique Use Cases |
|---|---|---|---|---|
| Nvidia | Nvidia GPUs | Image recognition, machine learning, gaming | High performance, scalability, energy efficiency | Specialized AI training and inference platforms; used in data centers and high-end PCs |
| Amazon | AWS Inferentia | Machine learning, large language models | High throughput, low latency | Optimized for cloud-based AI workloads; used in cloud services for customers |
| Google | TPUs | Machine learning, search, advertising | High performance, energy efficiency | Designed for specialized AI tasks, like large language models; used in Google Cloud services |
| Microsoft | Azure Maia 100 | Machine learning, AI research, cloud services | Low latency, high throughput | Designed for cloud-based AI tasks; used to run AI workloads in Azure |
| Meta | Specialized AI chips | Image recognition, social media algorithms | Optimized for specific tasks | Focused on tasks relevant to social media; used for image and video processing |

Technological Advancements

The relentless pursuit of faster, more efficient AI chips is driving innovation across various fronts. Researchers are constantly pushing boundaries in design, manufacturing, and architectural approaches, leading to significant improvements in processing power, energy efficiency, and specialized functionalities. These advancements are crucial for unlocking the full potential of AI applications, from complex scientific simulations to everyday tasks.

Ongoing Research and Development Efforts

Extensive research and development efforts are focused on optimizing AI chip architectures. This involves exploring novel materials, design paradigms, and fabrication processes to achieve higher performance and lower power consumption. Researchers are examining novel transistor structures, exploring 3D stacking techniques, and developing more sophisticated interconnect technologies. These advancements aim to enhance the speed and efficiency of data processing, crucial for handling the massive datasets required by modern AI algorithms.

Role of Specialized Architectures

Specialized architectures play a critical role in boosting AI chip performance. These architectures are tailored to specific AI tasks, such as deep learning, enabling them to process data more efficiently. For instance, the Tensor Cores in Nvidia GPUs are designed specifically for the matrix multiply-accumulate operations common in deep learning models. Similarly, specialized neural network processors (NNPs) are being developed to optimize particular types of neural network computations.

This targeted design approach leads to significant improvements in throughput and reduced energy consumption.
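To make the Tensor Core idea concrete, here is a minimal pure-Python sketch of the operation such units accelerate: a fused matrix multiply-accumulate, D = A × B + C, applied tile by tile. The function names `tile_mma` and `tiled_matmul` and the 4×4 tile size are illustrative assumptions; real Tensor Cores work on hardware-specific tile shapes and reduced-precision formats and run many tiles in parallel, so this sketch shows the arithmetic, not the speed.

```python
# Conceptual sketch of tile-level multiply-accumulate (D = A x B + C).
# Tile size and function names are illustrative, not any vendor's API.

Matrix = list[list[float]]


def tile_mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A @ B + C for one tile (A is m x k, B is k x n, C is m x n)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("A's column count must match B's row count")
    d = [row[:] for row in c]  # start from a copy of the accumulator C
    for i in range(m):
        for j in range(n):
            acc = d[i][j]
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-add per step
            d[i][j] = acc
    return d


def tiled_matmul(a: Matrix, b: Matrix, tile: int = 4) -> Matrix:
    """Multiply A (m x k) by B (k x n) by accumulating tile-sized MMAs."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, tile):
        for j0 in range(0, n, tile):
            # Accumulator tile for output block (i0, j0); edge tiles may be smaller.
            acc = [row[j0:j0 + tile] for row in out[i0:i0 + tile]]
            for p0 in range(0, k, tile):
                a_t = [row[p0:p0 + tile] for row in a[i0:i0 + tile]]
                b_t = [row[j0:j0 + tile] for row in b[p0:p0 + tile]]
                acc = tile_mma(a_t, b_t, acc)
            for di, row in enumerate(acc):
                out[i0 + di][j0:j0 + len(row)] = row
    return out
```

Splitting a large product into tile-sized multiply-accumulates is what lets hardware keep a small block of operands in fast on-chip storage while it works; in pure Python the tiling adds no speed and only mirrors that structure.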

Emerging Trends in AI Chip Design and Manufacturing

Several key trends are shaping the future of AI chip design and manufacturing. One prominent trend is heterogeneous integration, which combines different types of chips (e.g., CPUs, GPUs, and specialized AI accelerators) in a single package. This approach allows better use of resources and enhanced processing capabilities. Advances in chip packaging are crucial here, enabling denser integration and faster communication between components.

Finally, new materials and fabrication techniques, such as extreme ultraviolet (EUV) lithography, promise to increase chip density and performance.

Latest Advancements in AI Chip Technologies

The latest advancements in AI chip technologies span across various dimensions. Nvidia’s latest GPUs, for example, incorporate significant improvements in tensor core performance, allowing for faster training of large language models. Google’s TPU chips are constantly being refined to achieve higher efficiency in handling specific machine learning tasks. Furthermore, advancements in specialized AI processors are driving innovation in fields like image recognition and natural language processing.

Key Technological Innovations by Company

| Company | Key Technological Innovations |
|---|---|
| Nvidia | Advanced Tensor Cores, an improved CUDA architecture, new memory subsystems, and heterogeneous integration. |
| Amazon | Custom-designed inference processors for specific workloads (e.g., recommendation systems), optimized memory architectures, and specialized hardware for image and video processing. |
| Google | TPUs (Tensor Processing Units) with specialized architectures for machine learning tasks, efficient memory management, and ongoing development of new hardware architectures tailored for particular neural network types. |
| Microsoft | Specialized AI accelerators integrated into their server hardware, optimized software stacks for AI workloads, and development of customized processors for specific AI applications. |
| Meta | Focus on efficient hardware for large-scale image and video processing, specialized hardware for neural network inference, and development of hardware that can handle the complex demands of virtual reality. |

Future of AI Chips

The relentless march of AI is inextricably linked to the evolution of specialized hardware. AI chips, designed for the specific demands of machine learning tasks, are poised to reshape computing as we know it. Their increasing sophistication promises faster processing, lower energy consumption, and ultimately more powerful and accessible AI capabilities. The future of AI chips hinges on their ability to keep pace with the ever-growing complexity of AI algorithms.

This necessitates continuous innovation in architecture, materials, and manufacturing processes. As AI models become more intricate, so too must the chips that power them, leading to a dynamic and rapidly evolving landscape.

Predicted Performance and Capabilities

The next generation of AI chips will be characterized by significant advancements in processing power and energy efficiency. Increased parallelism and specialized hardware will enable faster training and inference of complex AI models. This is particularly important for real-time applications, such as autonomous vehicles and robotics, where quick responses are crucial.

Evolution of AI Chip Architectures

AI chip architectures are constantly adapting to meet the specific needs of different AI tasks. We can anticipate the emergence of more specialized architectures, tailored for particular applications. For example, chips optimized for image recognition might have vastly different designs compared to those focused on natural language processing. Heterogeneous integration, combining different processing units on a single chip, is another key trend.

This approach leverages the strengths of various architectures, creating a more versatile and powerful solution. Furthermore, neuromorphic computing, inspired by the human brain, is expected to play a significant role in future AI chip design.

Comparison of Future AI Chip Performance

| Company | Predicted Future Performance | Key Capabilities |
|---|---|---|
| Nvidia | Likely to maintain its leadership through advances in Tensor Cores and specialized architectures. Current data-center GPUs such as the H100 already deliver on the order of 1,000 teraflops of dense FP16 tensor throughput, so future gains will build from that level. | Strong in graphics processing and general-purpose computing, adapting for AI workloads. |
| Amazon | Likely to focus on optimized solutions for specific cloud workloads, with speed enhancements for common AI tasks. Inference performance may be highly competitive with other companies. | Strong cloud infrastructure, leveraging AI for internal processes. |
| Google | Expect continued advances in TPU architecture, targeting high-performance computing with optimized power efficiency. TPU v4 already peaks at about 275 teraflops (bf16) per chip. | Strong in research and development, likely to prioritize specialized architectures for specific applications. |
| Microsoft | Integrating AI capabilities into existing hardware and software ecosystems. Inference speed will likely be competitive, with a focus on efficient execution across diverse AI models. | Strong in software, focusing on broad compatibility and efficient utilization of resources. |
| Meta | Likely to prioritize AI chips for its massive social media data processing and other internal needs. Performance is expected to be tailored for large-scale image and video processing. | Significant data volumes and specific requirements for AI-driven social media features. |
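Teraflops figures like those above are peak rates. A rough way to turn them into wall-clock time is to count the work: multiplying an m×k matrix by a k×n matrix takes m·n·k multiply-adds, conventionally counted as 2·m·n·k floating-point operations. The sketch below is a back-of-the-envelope estimate, not a benchmark; it assumes a hypothetical sustained-utilization factor, since real workloads rarely reach peak throughput, and the chip figures in the example are made up.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product, counting a multiply-add as 2."""
    return 2 * m * k * n


def estimated_seconds(m: int, k: int, n: int, peak_teraflops: float,
                      utilization: float = 1.0) -> float:
    """Estimated runtime at a fraction `utilization` of the chip's peak rate.

    `utilization` is an assumption you supply; it is not measured here.
    """
    if peak_teraflops <= 0:
        raise ValueError("peak_teraflops must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    sustained_flops_per_second = peak_teraflops * 1e12 * utilization
    return matmul_flops(m, k, n) / sustained_flops_per_second


if __name__ == "__main__":
    # Hypothetical figures: a 100-teraflop chip running at 50% utilization.
    t = estimated_seconds(8192, 8192, 8192, peak_teraflops=100, utilization=0.5)
    print(f"{t * 1000:.1f} ms")
```

For this hypothetical chip, an 8192 × 8192 × 8192 product (about 1.1 × 10^12 FLOPs) comes out to roughly 22 ms. The utilization factor is the dominant source of uncertainty: memory bandwidth, data movement, and kernel efficiency often matter as much as the headline teraflops.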

Potential Challenges and Opportunities

The AI chip market presents significant opportunities for innovation and growth. However, challenges remain, such as the need for specialized talent, the complexity of design, and the high cost of development. Addressing these challenges will be critical to fostering a robust and competitive market. Furthermore, maintaining energy efficiency in high-performance AI chips will be crucial for wider adoption and sustainability.

The high energy consumption of current AI systems shows why efficiency matters.

Potential Impact on Society

The proliferation of AI chips will profoundly impact society. From autonomous vehicles to personalized medicine, the applications are vast. However, ethical considerations regarding the use of AI must be carefully addressed. Careful planning and thoughtful consideration of potential consequences are essential for responsible deployment of these powerful tools. The ability to control and mitigate biases in AI systems will be critical for equitable outcomes.

Impact on Data Centers

AI chips are revolutionizing modern data centers, transforming their capabilities and efficiency. These specialized processors are designed to handle the massive data streams and complex computations required for artificial intelligence tasks. Their impact extends beyond raw processing speed, affecting the entire infrastructure and operational workflows within data centers. The influence of AI chips on data center infrastructure is profound.

Their specialized architectures deliver more computation per watt than general-purpose processors, which can lower operating costs and the carbon footprint per unit of work. At the same time, denser and more powerful hardware raises a facility’s total power and cooling demands. AI chips are also driving the need for specialized hardware and software to optimize their performance, resulting in a shift toward more sophisticated data center designs.

Role of AI Chips in Accelerating Data Processing

AI chips are specifically engineered to handle the intensive computations needed for machine learning algorithms. Their parallel processing capabilities and optimized architectures significantly accelerate data processing speeds. This acceleration allows for quicker training of AI models, enabling faster insights from data and quicker response times in applications that rely on AI. The impact is particularly noticeable in tasks like image recognition, natural language processing, and predictive modeling.

For example, AI chips can process thousands of images in seconds, enabling real-time analysis and decision-making in applications such as autonomous vehicles and medical imaging.

Reshaping Data Center Operations

AI chips are reshaping data center operations by enabling new functionalities and workflows. Their incorporation is driving a paradigm shift from traditional server farms to more specialized, efficient, and AI-centric data center environments. This includes implementing AI-powered tools for tasks such as predictive maintenance, optimizing energy consumption, and automating routine operations. For instance, AI algorithms can predict potential hardware failures, proactively addressing issues before they cause downtime.

These improvements not only increase uptime but also reduce operational costs.
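As a toy illustration of failure prediction, the sketch below flags sensor readings (for example, hypothetical GPU temperatures in °C) that jump far outside the recent norm, using a trailing-window z-score. This is a deliberately simple statistical stand-in, not how any of the companies above actually implement predictive maintenance; production systems typically use trained models over many signals. The function name, window size, and threshold are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5,
                   threshold: float = 3.0) -> list[int]:
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a deviation")
    history: deque[float] = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma == 0:
                is_anomaly = value != mu  # flat history: any change stands out
            else:
                is_anomaly = abs(value - mu) / sigma > threshold
            if is_anomaly:
                flagged.append(i)
        history.append(value)  # the window slides forward either way
    return flagged


if __name__ == "__main__":
    # Hypothetical temperature readings with one sudden spike.
    temps = [60.0, 61.0, 60.5, 61.5, 60.0, 60.8, 61.2, 75.0, 60.9]
    print(flag_anomalies(temps))
```

On these sample readings, only the 75.0 spike at index 7 is flagged. One design caveat: the spike then sits in the trailing window and inflates the deviation for the next few readings; a sturdier version might leave flagged values out of the history.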

Impact on Data Center Components

The adoption of AI chips necessitates adjustments across various data center components. This includes modifications to the hardware, software, and cooling systems. The table below highlights the impact on different data center components.

| Data Center Component | Impact of AI Chips |
|---|---|
| Hardware | Specialized hardware, such as custom AI accelerators and high-bandwidth interconnects, becomes crucial. This specialized hardware is designed for high-throughput data movement and complex computations. |
| Software | Optimized software frameworks and libraries are essential for efficient utilization of AI chips. This includes specialized programming languages and tools for developing and deploying AI models. |
| Cooling Systems | Increased computational power from AI chips generates more heat, demanding more sophisticated cooling systems to maintain optimal operating temperatures. This may involve liquid cooling or other advanced cooling technologies. |
| Networking | Higher-bandwidth, lower-latency networking infrastructure is needed to support the high-speed data transfer requirements of AI workloads. |
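The cooling row follows from basic physics: nearly all the electrical power a server draws ends up as heat that the cooling system must remove. The sketch below converts a rack’s power draw into heat load using the standard factor of about 3.412 BTU per hour per watt. It assumes, as a simplification, that all drawn power becomes heat; the server wattage and rack size in the example are hypothetical.

```python
# 1 W of power dissipated is about 3.412 BTU/h of heat.
WATTS_TO_BTU_PER_HOUR = 3.412


def rack_heat_load(watts_per_server: float,
                   servers_per_rack: int) -> tuple[float, float]:
    """Return (heat in kW, heat in BTU/h), assuming all drawn power becomes heat."""
    if watts_per_server < 0 or servers_per_rack < 0:
        raise ValueError("power and server count must be non-negative")
    total_watts = watts_per_server * servers_per_rack
    return total_watts / 1000, total_watts * WATTS_TO_BTU_PER_HOUR


if __name__ == "__main__":
    # Hypothetical rack: four AI servers drawing 10 kW each.
    kw, btu = rack_heat_load(10_000, 4)
    print(f"{kw:.0f} kW = about {btu:,.0f} BTU/h")
```

Concentrating that much heat in a single rack is what pushes operators toward the liquid cooling and other advanced techniques listed in the table.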

Ethical Considerations

The rapid advancement of AI chips presents exciting opportunities but also necessitates careful consideration of the ethical implications. As these powerful tools become more integrated into our lives, we must proactively address potential biases, ensure responsible development, and establish robust ethical guidelines to mitigate harm. Ignoring these considerations could lead to unintended consequences and exacerbate existing societal inequalities.

Potential Biases in AI Systems

AI systems trained on biased data can perpetuate and amplify existing societal prejudices. AI chips do not create these biases themselves, but by making it fast and cheap to train and deploy models on vast amounts of data, they can spread biased outputs at scale. If the training data reflects historical inequalities, the resulting AI models will likely inherit and amplify those biases. For instance, facial recognition systems trained predominantly on images of light-skinned individuals may perform less accurately on darker-skinned individuals.

This can lead to discriminatory outcomes in areas like law enforcement and security. Furthermore, biases in data can affect various aspects of AI, from loan applications to hiring processes, leading to unfair and unjust outcomes.
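A basic way to detect the kind of disparity described above is to break a model’s accuracy down by demographic group instead of reporting a single overall number. The minimal sketch below assumes you already have true labels, predictions, and a group label for each example; the function names and toy data are hypothetical, and a real audit would look at more than accuracy (for example, false positive and false negative rates per group).

```python
from collections import defaultdict


def accuracy_by_group(y_true: list[int], y_pred: list[int],
                      groups: list[str]) -> dict[str, float]:
    """Compute classification accuracy separately for each group label."""
    if not len(y_true) == len(y_pred) == len(groups):
        raise ValueError("inputs must have the same length")
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(per_group: dict[str, float]) -> float:
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Hypothetical toy data: the model is perfect on group A, poor on group B.
    y_true = [1, 0, 1, 1, 0, 1, 0, 1]
    y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    per_group = accuracy_by_group(y_true, y_pred, groups)
    print(per_group, accuracy_gap(per_group))
```

In this toy example the overall accuracy is 62.5%, a figure that hides the fact that the model is right every time for group A and only a quarter of the time for group B.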

Importance of Responsible AI Development and Deployment

Responsible AI development and deployment requires a multi-faceted approach. It necessitates careful data curation and selection to mitigate bias. Transparency in the algorithms and models is essential to understand how decisions are made and identify potential vulnerabilities. Furthermore, robust testing and validation procedures are crucial to ensure fairness and accuracy across diverse populations. The AI chip industry needs to prioritize responsible design and development to ensure fairness and mitigate the potential for harm.

Need for Ethical Guidelines in the AI Chip Industry

Establishing clear ethical guidelines for the AI chip industry is crucial. These guidelines should address data privacy, algorithmic transparency, and the potential for bias amplification. The guidelines should be developed collaboratively by industry stakeholders, policymakers, and ethicists. This collaborative effort is vital to ensure that AI chip development aligns with societal values and minimizes potential harms. Examples of ethical guidelines could include provisions for data anonymization, fairness audits of AI models, and mandatory reporting of biases.

Potential Ethical Dilemmas Related to AI Chip Use

The widespread adoption of AI chips raises several ethical dilemmas. One major concern is the potential for autonomous weapons systems. AI-powered systems capable of making life-or-death decisions without human intervention pose significant ethical challenges. Moreover, the use of AI chips in surveillance technologies raises concerns about privacy violations and potential misuse. The potential for manipulation and misinformation through deepfakes is another significant ethical challenge.

Another potential ethical dilemma is the displacement of human labor due to automation powered by AI chips. Addressing these concerns requires a thoughtful and proactive approach. For example, international agreements on the use of AI in warfare could help mitigate the risk of autonomous weapons systems.

Examples of Ethical Dilemmas in Specific Applications

AI chips are being integrated into numerous applications, each presenting unique ethical considerations. For instance, in healthcare, AI-powered diagnostic tools must be rigorously tested and validated to ensure accuracy and fairness across different patient demographics. In autonomous vehicles, the ethical dilemmas regarding accident scenarios, where the AI must decide who to prioritize, are particularly complex. Furthermore, the use of AI in finance raises concerns about algorithmic bias in loan approvals and investment decisions.

These specific examples highlight the importance of careful consideration of ethical implications in every application of AI chips.

Epilogue

The AI chip market is dynamic and rapidly evolving, with Nvidia, Amazon, Google, Microsoft, and Meta leading the charge. These companies are shaping the future of computing, and their innovations will continue to impact various industries. The future of AI is undoubtedly intertwined with the development and deployment of these advanced chips.

User Queries

What are the key differentiating factors between AI chips from different companies?

Each company’s AI chips have unique architectures, processing capabilities, and power efficiency tailored to specific needs. Some excel in specific tasks like image recognition, while others prioritize general-purpose computing. Features like custom instructions and optimized software libraries further differentiate the offerings.

What is the predicted growth trajectory of the AI chip market?

The AI chip market is projected to experience significant growth in the coming years, fueled by the increasing demand for AI applications across various industries. The growth is particularly driven by the need for faster and more efficient processing of data in large-scale data centers and AI-powered applications.

What ethical concerns surround the widespread adoption of AI chips?

Bias in algorithms, the potential for misuse, and the need for responsible development and deployment are key ethical considerations. Transparency, accountability, and guidelines are crucial to mitigating potential harms.